It is now 2024, and I’ve decided to write a post about Synapse Analytics in Azure.

I use Spark in Synapse to prepare data for analysis – primarily for the benefit of OLAP users and client applications.

I’ve been working with Spark in Synapse for a couple of years now.

This platform is best described as a “managed” environment for an audience of users who wish to focus on building Spark data applications without really understanding much about the management and configuration of a Spark environment.

This Spark platform is hosted within the Synapse portal, which is in turn hosted within the Azure portal. At one point this was a VERY highly promoted platform for big data transformation and processing. But in late 2023 Microsoft started moving its focus away from Synapse, and it now seems to be promoting another shiny new platform, namely “Fabric”. All of the Azure customers who were once encouraged to use Synapse are now being asked to take a look at “Fabric” instead. From what I can tell, there is little difference between the profile of a customer who would use notebooks in Synapse and the profile of a customer who would use “Fabric” instead.

These two Spark offerings (Synapse and “Fabric”) have a great deal in common. They both target customers who are “low-code” notebook developers, sometimes referred to as “citizen developers”. These customers are likely to build solutions with a combination of SQL and Python. While I appreciate the need to have development tools for every type of software developer, I have mixed feelings about putting Apache Spark into everyone’s toolbelt. I believe that a data analyst or data scientist with a poor understanding of Spark is likely to abuse it and cause as many problems as they solve.

From where I stand, these two Spark offerings are not very good. The level of quality is low, and I suspect some of that is due to the fact that Microsoft thinks the target audience really can’t tell the difference. While a demanding customer might go to a more mature Spark offering like Databricks or HDInsight, the ones who “don’t know any better” are likely to find their way to Fabric and Synapse.

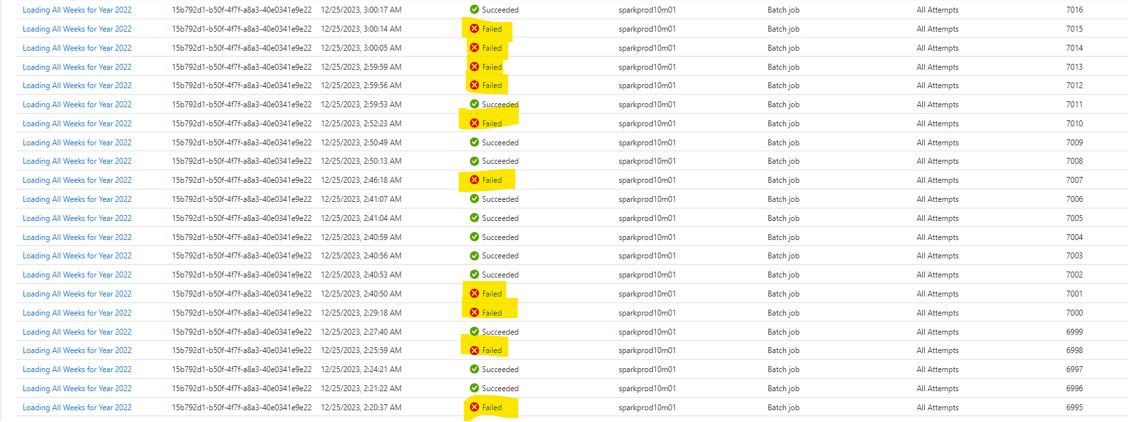

I will stop sugar-coating, and take things one step further in saying that the Spark offering in Synapse is a pretty terrible one. It is buggy and incredibly painful to use. The following is just one of many examples. Consider the following batch jobs that were submitted on Christmas day of 2023. They were submitted from 2:20 AM to 3:00 AM eastern time (USA). …

Notice all the green and red icons in the image above. At first glance you might assume that they modified the Spark portal to reflect the color theme for Christmas. The red and the green have the esthetic of a Christmas tree. However, when I dig a bit deeper I find that this is not what Microsoft had in mind. What is happening is that Synapse is throwing a massive fit as a result of failures that are happening in the underlying network. All of the failed jobs in this period of time are due to socket exceptions. The so-called “managed vnet” is wetting the bed and is causing what Microsoft likes to call “transient communication failures”.

Is this a special gift that they’ve sent me because it is Christmas? Definitely not. Microsoft sends me this gift EVERY. SINGLE. DAY. I have dozens and dozens of socket exceptions on a daily basis. The socket exceptions have become a terrible plague. I have been experiencing them for as long as I have used Synapse, but they seem to be getting worse. The failures happen on executors and drivers, in both custom code and Microsoft’s internal components. After investigating hundreds of them, I find them very easy to recognize. Below are examples of the messages we find in our drivers and executors.

- Connection Reset by Peer

- Connection Timeout Expired. The timeout period elapsed during the post-login phase.

- Connect to vm-ef123456:41111 [vm-xyz/ww.x.yy.z] failed: Connection refused
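Since these messages are so recognizable, tallying them can be automated. Here is a rough sketch in Python of how I might tag driver and executor log lines by the kind of socket failure they contain. The patterns come straight from the three example messages above; the category names and the `classify_socket_failure` and `tally_failures` helpers are my own inventions for illustration, not anything provided by Synapse or Spark.

```python
import re
from collections import Counter

# Patterns derived from the example messages listed above.
# The category names on the left are hypothetical labels.
SOCKET_PATTERNS = {
    "connection_reset": re.compile(r"connection reset by peer", re.IGNORECASE),
    "post_login_timeout": re.compile(
        r"timeout period elapsed during the post-login phase", re.IGNORECASE
    ),
    "connection_refused": re.compile(
        r"connect to \S+ .*failed: connection refused", re.IGNORECASE
    ),
}


def classify_socket_failure(message):
    """Return the label of the first matching pattern, or None if nothing matches."""
    for label, pattern in SOCKET_PATTERNS.items():
        if pattern.search(message):
            return label
    return None


def tally_failures(messages):
    """Count messages per label; anything unrecognized is counted as 'other'."""
    return Counter(classify_socket_failure(m) or "other" for m in messages)
```

Feeding the error lines from a day of failed jobs into `tally_failures` would give a quick count of how many failures look like network trouble versus everything else. It only matches on message text, so it is a triage aid and not a diagnosis.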

Please note that these exceptions are NOT the result of connecting to a resource over the public Internet. Nor are they the result of a rare “glitch” in network connectivity. I’m simply connecting to private resources within the same Azure data region and I’m experiencing bugs in Microsoft’s VNET technology, specifically in their “private endpoints” or MPEs. There is no reason for this level of failure. The resources are in immediate proximity (~0 ms latency).

So how come I’m the only customer complaining? I think the answer is that I was duped into moving to a platform that targets a totally different type of customer than me. I had originally moved to Synapse from Azure Databricks, where I experienced virtually NO socket exceptions. After making this change, the socket exceptions have become the reason for dozens of failures a day (about 99.9% of my failures, and about 3% of my overall Spark jobs). I’m not sure if this affects me more than other customers. If so, my guess is that the other customers of Synapse Analytics do not run the same volume of jobs through their environment as I do. Or perhaps the other customers have a high level of their own bugs that cause failures, and the noise created by those bugs makes it more difficult to identify all the network failures which are the fault of the Synapse platform itself.

I do not intend to remain a Synapse Spark customer for much longer. One of my New Year’s resolutions is to find a more reliable Spark platform to host our workloads. I suspect I will return to Azure Databricks, or perhaps move to HDInsight. However, as long as I’m a Synapse customer I intend to share what I know about this platform. I will try to share the good things too, when possible.

Hopefully this post was helpful to some other struggling Synapse customers. If you are also suffering from a plague of socket exceptions, then you may be happy to hear that you aren’t alone.

As a final note, I would assure everyone that I’ve reported these socket bugs to Microsoft MANY times and they are not sympathetic. Once again, I think the main reason is that the platform is a poor one, it was built for a different target audience, and it was probably not tested for a high volume of work. Insofar as the support incidents are concerned, I won’t go into the details right now. Each of those support incidents is a story in its own right. However, I plan to tell those stories soon, if there is interest.

Wishing everyone my best in 2024!